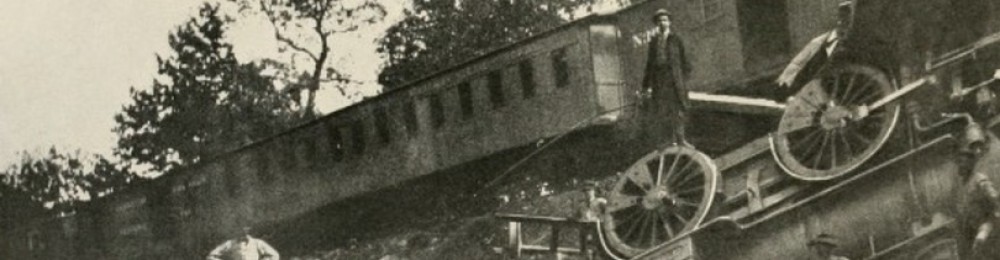

Courtesy of Jim Harris at the excellent OCDQBlog.com comes this classic example of a real-life Information Quality Trainwreck concerning US healthcare. Keith Underdown also sent us the link to the story on USA Today’s site.

It seems that 1800 US military veterans have recently been sent letters informing them that they have the degenerative neurological disease ALS (a condition similar to that which physicist Stephen Hawking has).

At least some of the letters, it turns out, were sent in error.

[From the LA Times]

As a result of the panic the letters caused, the agency plans to create a more rigorous screening process for its notification letters and is offering to reimburse veterans for medical expenses incurred as a result of the letters.

“That’s the least they can do,” said former Air Force reservist Gale Reid in Montgomery, Ala. She racked up more than $3,000 in bills for medical tests last week to get a second opinion. Her civilian doctor concluded she did not have ALS, also known as Lou Gehrig’s disease.

So, poor quality information entered a process, resulting in incorrect decisions, distressing communications, and additional costs to individuals and government agencies. Yes. This is ticking all the boxes to be an IQ Trainwreck.

The LA Times reports that the Department of Veterans Affairs estimates that 600 letters were sent to people who did not have ALS. That is a 33% error rate. The cause of the error? According to the USA Today story:

Jim Bunker, president of the National Gulf War Resource Center, said VA officials told him the letters dated Aug. 12 were the result of a computer coding error that mistakenly labeled the veterans with amyotrophic lateral sclerosis, or ALS.

Oh. A coding error on medical data. We have never seen that before on IQTrainwrecks.com in relation to private health insurer/HMO data. Gosh no.

Given the impact that a diagnosis of an illness which kills affected people within an average of 5 years can have, the simple coding error has been bumped up to a classic IQTrainwreck.

There are actually two information quality issues at play here, however, which illustrate one of the common problems in convincing people that there is an information quality problem in the first place. While the VA now **estimates** (and I put that in bold for a reason) that the error rate was 600 out of 1800, the LA Times reporting tells us that:

… the VA has increased its estimate on the number of veterans who received the letters in error. Earlier this week, it refuted a Gulf War veterans group’s estimate of 1,200, saying the agency had been contacted by fewer than 10 veterans who had been wrongly notified.

So, the range of estimates for the error goes from 10 in 1800 (about 0.6%) to 600 in 1800 (33%) to 1200 in 1800 (67%). The interesting thing for me as an information quality practitioner is that the VA’s initial estimate was based on the number of people who had contacted the agency.
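For anyone who wants to check the arithmetic, here is a minimal sketch that recomputes the three rates from the counts quoted in the press reports. The labels and the `error_rate` helper are names made up for this illustration, and "fewer than 10" is treated as 10 to give an upper bound.

```python
# Counts quoted in the post; the dictionary labels are illustrative only.
LETTERS_SENT = 1800

estimates = {
    "complaints received by the VA": 10,   # "fewer than 10", so an upper bound
    "revised VA estimate": 600,
    "veterans group estimate": 1200,
}


def error_rate(errors, total):
    """Return the share of erroneous letters as a percentage."""
    return 100.0 * errors / total


rates = {label: error_rate(count, LETTERS_SENT) for label, count in estimates.items()}

for label, pct in rates.items():
    print(f"{label}: {pct:.1f}% of {LETTERS_SENT} letters")
```

The spread between the lowest and highest figure is roughly two orders of magnitude, which is the whole point: the answer depends on what was counted.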

This is an important lesson: the number of reported errors (anecdotes) may be less than the number of actual errors, and the only real way to know is to examine the quality of the data and look for evidence of errors and inconsistency so you can Act on Fact.

The positive news… the VA is changing its procedures. The bad news about that… it looks like they are investing money in inspecting defects out of the process rather than making sure the correct fact is correctly coded in patient records.

Daragh,

Reminds me of an issue I read about that I highlighted in my Master’s thesis:

“During a blackout the week of July 17, 2006, ConEd estimated the number of customers without power at 2,500, which was reported to the media. Days later, estimates went up to 100,000 without power. When this larger number became public, customers were furious [i] that the company could not reliably estimate the effect of the blackout, and [ii] that it took so long to restore power. The original figure was based on the number of complaints the company had received, while the updated number was an estimate of the number of actual people without power. There was a belief that ConEd’s lax response was due to assuming the problem was not as widespread as it was. A number from one context was used inappropriately in a different context, leading to a lower level of action that resulted in angry customers.”

Cheers,

Eric

Eric

We are veering into the wonderful world of ACCURACY when we start talking about situations like this. It is clear that in both the VA case and the ConEd case they took the number of complaints to be an accurate indicator of the actual number of errors.

It’s an unfortunate aspect of human nature that we will always look for what I call “the happy path” and will latch on to numbers which are supportive of that. In my old job I was more often asked to recheck figures that had a negative impact than ones that supported the “Happy Path Delusion”. Of course, there was that one time when the horrifying impact figure I presented actually was the Happy Path, but that’s another story.

A better view might have been that (applying a Poisson distribution) more than one defect reported suggests that there are likely to be more in your data, and you should really look a bit closer at the actual level of errors before breathing a sigh of relief.
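One way to make that concrete, offered as a rough sketch rather than a definitive model: suppose each person who received a wrong letter contacts the agency independently with some small probability. The number of complaints is then approximately Poisson with mean equal to (actual errors) × (reporting rate). The reporting rate of 1.5% below is purely hypothetical, picked for illustration; nothing in the news reports tells us what it really was. The function names are likewise made up for this example.

```python
import math

# HYPOTHETICAL: assumed chance that someone who got a wrong letter contacts the agency.
ASSUMED_REPORT_RATE = 0.015


def poisson_pmf(k, lam):
    """Probability of exactly k events when the expected count is lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


def prob_at_most(k, lam):
    """Probability of seeing k or fewer events when the expected count is lam."""
    return sum(poisson_pmf(i, lam) for i in range(k + 1))


# How plausible is "fewer than 10 complaints" (9 or fewer) under each estimate?
for actual_errors in (10, 600, 1200):
    expected_reports = actual_errors * ASSUMED_REPORT_RATE
    p = prob_at_most(9, expected_reports)
    print(f"{actual_errors} actual errors -> expect {expected_reports:.2f} complaints, "
          f"P(9 or fewer) = {p:.3f}")
```

Under this assumed reporting rate, a handful of complaints is entirely compatible with hundreds of actual errors, so the complaint count on its own tells you very little. Change the assumed rate and the numbers move, which is exactly why you need to measure the data itself.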