In a sign of the change of emphasis that is seeping through the global financial services industry, the Irish Financial Services Regulatory Authority is pursuing 24 cases of overcharging by banks and insurance companies, according to this morning’s Irish Independent.

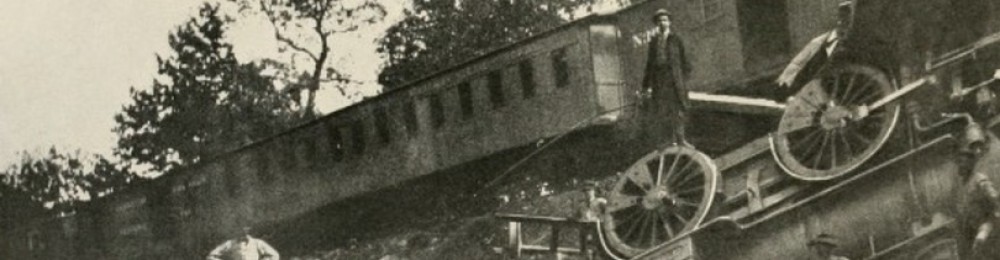

Of course, stories of overcharging and other information quality disasters in the financial services industry are not new to the IQTrainwrecks reader. Over the years we’ve covered them here, here, here, here, here, here, here, and here (to select just a few).

We’ve also covered the growing “hard touch” trend in financial services that is bringing a clear “cost of non-quality” to bear on banking and financial services processes (see this post from August of last year).

Why is this now an IQTrainwreck again?

- Regulators are taking a tougher line with banks over overcharging and undercharging (much as regulators did in my former industry, telecommunications).

The new head of the Irish Financial Services Regulatory Authority is concerned about the number of overcharging cases and recently said:

> It is clear from recent cases that change is needed in how firms handle charging and pricing issues.

- Financial services companies, facing severe cuts in budgets and manpower, are increasingly exposed to the risks that manual workarounds in processes simply stop, that end-user computing controls are not run, and, ultimately, that inaccuracies and errors creep into the information they hold about the money they hold for, or have lent to, customers.

As the regulatory focus shifts from ‘light touch’ to ‘velvet fist’, those financial services companies that invest in appropriate strategies for managing information quality, backed by a culture of quality, will be best placed to avoid regulatory penalties.